Chapter 4 Notes

Gridworld (Example 3.5) Solved by Policy Evaluation (Prediction) for

Repeat gridworld example but use policy evaluation (prediction) for instead of solving Bellman's equations as a linear system of equations. (See original code here.)

xxxxxxxxxx% ... reuse code that sets up p(s',r|s,a) from Chapter 3% Inplace policy evaluation (prediction) for v_pinum_iter = 100; % Maximum number of iterationsv = zeros(25,1); % Initial value functionfor iter = 1:num_iter vold = v; % Save to test for convergence for i=1:5 for j=1:5 s = (i-1)*5 + j; vs = 0; % Accumulator for inplace computation for a=1:4 for ip=1:5 for jp=1:5 sp = (ip-1)*5 + jp; for r=1:4 % Compute expectation suggested by Bellman's equation vs = vs + 0.25 * p(sp,r,s,a) * (R(r) + gamma*v(sp)); end end end end v(s) = vs; % Inplace assignment end end err = norm(v-vold,1); % Compute size of update fprintf('iter = %3d, |v-vold| = %10.8f\n',iter,err); if(err < 0.01) % Test for termination break; endendHere is the output:

xxxxxxxxxxiter = 1, |v-vold| = 27.62459555iter = 2, |v-vold| = 10.16786506iter = 3, |v-vold| = 5.71330933iter = 4, |v-vold| = 3.29504090iter = 5, |v-vold| = 1.96247912iter = 6, |v-vold| = 1.22910789iter = 7, |v-vold| = 0.83593787iter = 8, |v-vold| = 0.65833058iter = 9, |v-vold| = 0.55351745iter = 10, |v-vold| = 0.48344337iter = 11, |v-vold| = 0.43265023iter = 12, |v-vold| = 0.37922873iter = 13, |v-vold| = 0.32782581iter = 14, |v-vold| = 0.28069078iter = 15, |v-vold| = 0.23871155iter = 16, |v-vold| = 0.20202362iter = 17, |v-vold| = 0.17036710iter = 18, |v-vold| = 0.14329432iter = 19, |v-vold| = 0.12028819iter = 20, |v-vold| = 0.10082764iter = 21, |v-vold| = 0.08442181iter = 22, |v-vold| = 0.07062582iter = 23, |v-vold| = 0.05904626iter = 24, |v-vold| = 0.04934076iter = 25, |v-vold| = 0.04121476iter = 26, |v-vold| = 0.03441677iter = 27, |v-vold| = 0.02873334iter = 28, |v-vold| = 0.02398405iter = 29, |v-vold| = 0.02001685iter = 30, |v-vold| = 0.01670394iter = 31, |v-vold| = 0.01393805iter = 32, |v-vold| = 0.01162928iter = 33, |v-vold| = 0.00970236

This gives the same result for as solving Bellman's equations as a linaer system.

Gridworld (Example 3.5) Solved by Policy Evaluation (Prediction) for

Repeat gridworld example but use policy evaluation (prediction) for .

xxxxxxxxxx% ... reuse code that sets up p(s',r|s,a) from Chapter 3% Inplace policy evaluation (prediction) for q_pinum_iter = 100; % Maximum number of iterationsq = zeros(25,4); % Initial value functionfor iter = 1:num_iter qold = q; % Save to test for convergence for i=1:5 for j=1:5 s = (i-1)*5 + j; for a=1:4 qsa = 0; % Accumulator for inplace computation for ip=1:5 for jp=1:5 sp = (ip-1)*5 + jp; for r=1:4 % Compute expectation suggested by Bellman's equation qsa = qsa + p(sp,r,s,a)*(R(r) + gamma*0.25*sum(q(sp,:))); end end end q(s,a) = qsa; % Inplace assignment end end end err = sum(sum(abs(q-qold))); % Compute size of update fprintf('iter = %3d, |q-qold| = %10.8f\n',iter,err); if(err < 0.01) % Test for termination break; endendHere is the output:

xxxxxxxxxxiter = 1, |q-qold| = 123.36041637iter = 2, |q-qold| = 53.58055428iter = 3, |q-qold| = 26.93173329iter = 4, |q-qold| = 14.48878524iter = 5, |q-qold| = 7.97903765iter = 6, |q-qold| = 4.81052880iter = 7, |q-qold| = 3.27817039iter = 8, |q-qold| = 2.64359683iter = 9, |q-qold| = 2.34263687iter = 10, |q-qold| = 2.05074371iter = 11, |q-qold| = 1.74947287iter = 12, |q-qold| = 1.46812616iter = 13, |q-qold| = 1.21869089iter = 14, |q-qold| = 1.00418227iter = 15, |q-qold| = 0.82320700iter = 16, |q-qold| = 0.67242938iter = 17, |q-qold| = 0.54787302iter = 18, |q-qold| = 0.44557900iter = 19, |q-qold| = 0.36191219iter = 20, |q-qold| = 0.29367901iter = 21, |q-qold| = 0.23814762iter = 22, |q-qold| = 0.19302073iter = 23, |q-qold| = 0.15638828iter = 24, |q-qold| = 0.12667448iter = 25, |q-qold| = 0.10258628iter = 26, |q-qold| = 0.08306671iter = 27, |q-qold| = 0.06725406iter = 28, |q-qold| = 0.05444723iter = 29, |q-qold| = 0.04407654iter = 30, |q-qold| = 0.03567962iter = 31, |q-qold| = 0.02888143iter = 32, |q-qold| = 0.02337794iter = 33, |q-qold| = 0.01892282iter = 34, |q-qold| = 0.01531649iter = 35, |q-qold| = 0.01239732iter = 36, |q-qold| = 0.01003444iter = 37, |q-qold| = 0.00812186

Here is the solution.

| s | ||||

|---|---|---|---|---|

| 1 | 1.9786 | 1.3699 | 7.9108 | 1.9785 |

| 2 | 8.7897 | 8.7897 | 8.7897 | 8.7897 |

| 3 | 2.9853 | 2.0255 | 4.7905 | 7.9107 |

| 4 | 5.3227 | 5.3227 | 5.3227 | 5.3227 |

| 5 | 0.3434 | 0.4930 | 0.3433 | 4.7905 |

| 6 | 2.9785 | 0.0462 | 2.6935 | 0.3698 |

| 7 | 7.9107 | 0.6648 | 2.0255 | 1.3698 |

| 8 | 3.9852 | 0.6062 | 1.7172 | 2.6934 |

| 9 | 4.7905 | 0.3227 | 0.4930 | 2.0255 |

| 10 | 1.3433 | -0.3625 | -0.5070 | 1.7171 |

| 11 | 1.3698 | -0.8758 | 0.6648 | -0.9539 |

| 12 | 2.6934 | -0.3916 | 0.6062 | 0.0461 |

| 13 | 2.0255 | -0.3190 | 0.3227 | 0.6647 |

| 14 | 1.7171 | -0.5267 | -0.3625 | 0.6061 |

| 15 | 0.4929 | -1.0645 | -1.3625 | 0.3227 |

| 16 | 0.0461 | -1.6715 | -0.3916 | -1.8759 |

| 17 | 0.6647 | -1.2103 | -0.3190 | -0.8759 |

| 18 | 0.6061 | -1.1060 | -0.5267 | -0.3916 |

| 19 | 0.3227 | -1.2803 | -1.0645 | -0.3191 |

| 20 | -0.3626 | -1.7774 | -2.0645 | -0.5268 |

| 21 | -0.8759 | -2.6716 | -1.2103 | -2.6716 |

| 22 | -0.3916 | -2.2104 | -1.1060 | -1.6716 |

| 23 | -0.3191 | -2.1060 | -1.2803 | -1.2104 |

| 24 | -0.5268 | -2.2803 | -1.7774 | -1.1061 |

| 25 | -1.0645 | -2.7774 | -2.7774 | -1.2804 |

Does the policy derived from agree with the policy derived from ?

Which is simpler to for policy determination: or ?

Do the solutions agree mathematically? Do some spot checking using hand calculations.

Policy Improvement

Background

= expected return starting in state and following policy

= expected return taking action from state and following policy thereafter

, ( is average/expectation of )

lets us examine the value of taking specific action out of a state

Goal

Policy improvement: Adapt policy to put more probability on more valuable actions

Deterministic Policies

Policy is a conditional probability density function .

A stochastic policy places non-zero probability mass on multiple actions.

A deterministic places all probability mass on one action.

Decision function is defined as

For a deterministic policy, is the action chosen by the policy with probability one,

For a deterministic policy, we have the simple state-value/action-value relation,

For some other deterministic policy , is the value of following deterministic policy right now in state and hereafter following deterministic policy .

Policy Improvement

Policy is better than policy if for all .

For deterministic policies, we need for all .

Policy Improvement Theorem (Deterministic Policies)

Given two deterministic policies and that choose different actions in one state, suppose that

This states that it is better to follow policy in state and thereafter follow policy . By repeatedly applying this decision, we can show that it is always better to follow policy than in the sense that for all .

This is proved by repeatedly appling the superiority of over in state and the expansion formula

which is true for all policies (stochastic or deterinistic).

We have shown that is better than .

Policy improvement step: Define a new greedy policy for which for all states.

Suppose as good as but not better than . Then it is optimal .

Homework: Prove the policy improvement theorem in the stochastic case.

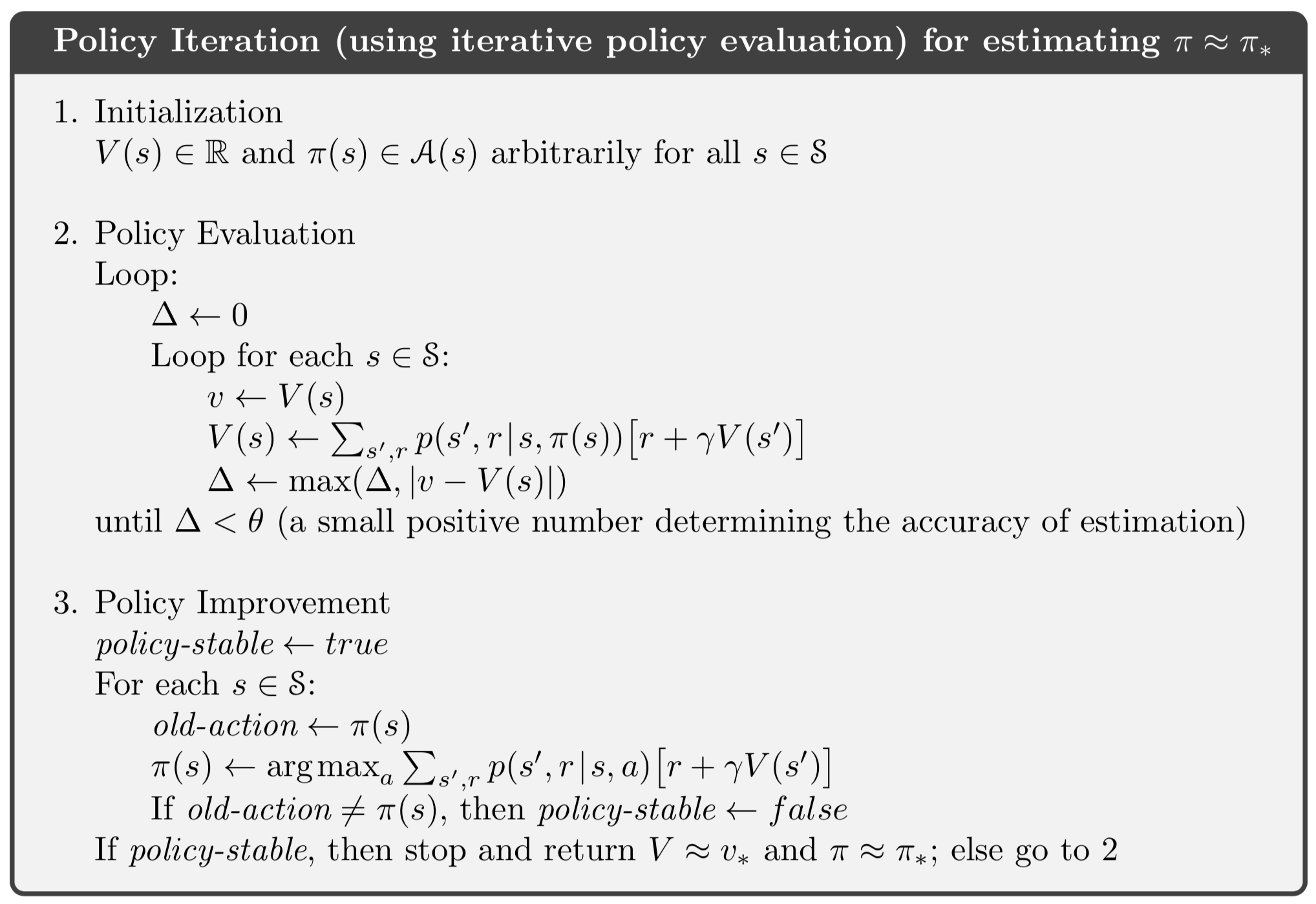

Policy Iteration

Obtain a sequence of monotonically improving policy and value functions by iterating policy evaluation (prediction) and policy improvement (greedy action selection).

Must converge in a finite number of iterations.

Homework: Modify codes given (or write code from scratch) to implement policy iteration for the grid world example 3.5 and compare your results to those in example 3.8 on page 65.